Scoring latency for models with different tree counts and tree levels...

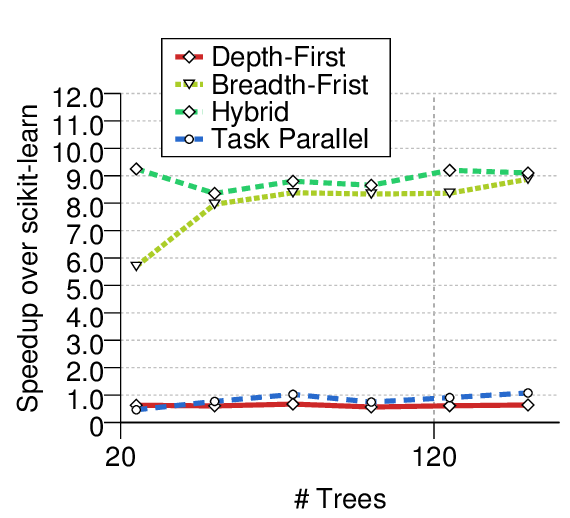

Speedup relative to scikit-learn over varying numbers of trees when...

H2O.ai Releases H2O4GPU, the Fastest Collection of GPU Algorithms on the Market, to Expedite Machine Learning in Python | H2O.ai

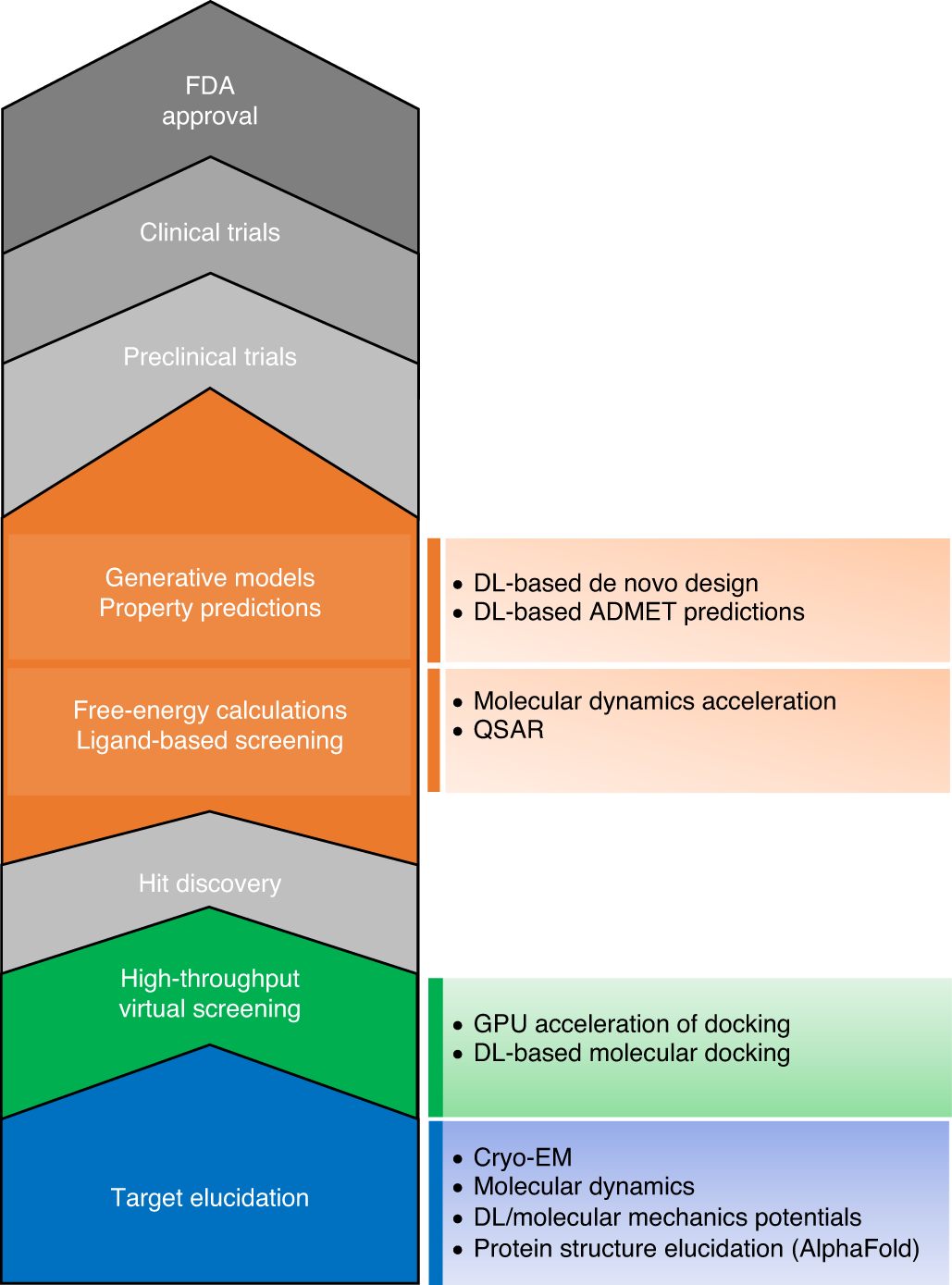

The transformational role of GPU computing and deep learning in drug discovery | Nature Machine Intelligence

Speedup relative to scikit-learn on varying numbers of features on a...

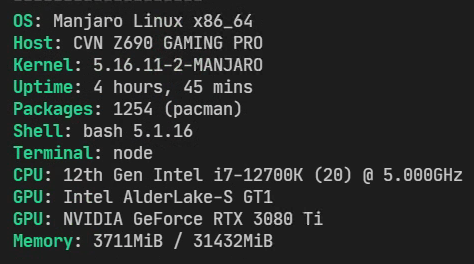

Random segfault training with scikit-learn on Intel Alder Lake CPU platform - vision - PyTorch Forums